Let’s begin by stating the obvious: testing microservices is hard. Their inherently distributed nature introduces dependencies on downstream services (i.e. other services that a microservice makes requests to), and setting these dependencies up locally can be taxing (pull the latest code, run migrations, create the necessary data and, of course, recurse for transitive dependencies).

Our current infrastructure (based on Kubernetes) attempts to make this easier by allowing developers to forward local ports to services hosted in a “staging” cluster. Although this lets developers test their changes without setting up downstream services locally, it introduces a new necessity: a highly available staging environment! Furthermore, port forwarding doesn’t help when we do integration testing with upstream services (i.e. services that make requests to the microservice being tested).

A blip in any one microservice could block the integration testing workflows of all its upstream services (i.e. services that make requests to the microservice experiencing downtime). This is particularly troubling when a “frequently used” service goes down. For instance, if the user service is down, no one can log in to the staging mobile app, rendering the whole setup unusable.

Essentially, to ensure that integration testing is never blocked, the staging environment should be sacrosanct; it should host reliable services that mimic production behaviour. Unfortunately, this was not the case for us, since our staging environment also serves as the first stop for integration testing in a “production-like” environment. The testing workflow usually involves merging the development branch into the staging branch and then deploying it to the staging cluster.

Since the staging cluster is usually the first place where local changes are integration tested, erroneous commits do get merged and deployed, destabilising it. This also means that merge conflicts are routine, and we all know how we feel about them 😃. Sometimes changes even end up being overwritten, increasing confusion and decreasing developer productivity.

Our motivation behind a testing tool was twofold: (i) to make the staging environment more reliable and (ii) to save the time spent merging and resolving conflicts. Both can be achieved by addressing one root issue: a typical microservice environment hosts only one “version” of a microservice at any given time, derived from the “stage” branch. If we give the tech team a way to deploy their development branches in parallel, they can test their changes independently! Not only will this reduce the number of bad commits pushed to the stage branch, it will also save the time that would otherwise have gone into resolving merge conflicts.

What are Virtual Clusters?

After speaking with various teams and gathering requirements, we conceptualised the notion of a “virtual cluster” (VC). A VC can be thought of as a parallel environment residing within the staging cluster, capable of (i) hosting multiple microservices and (ii) communicating with other services in the staging cluster. All environment configurations remain identical to the staging environment. The following points summarise the behaviour we set out to achieve.

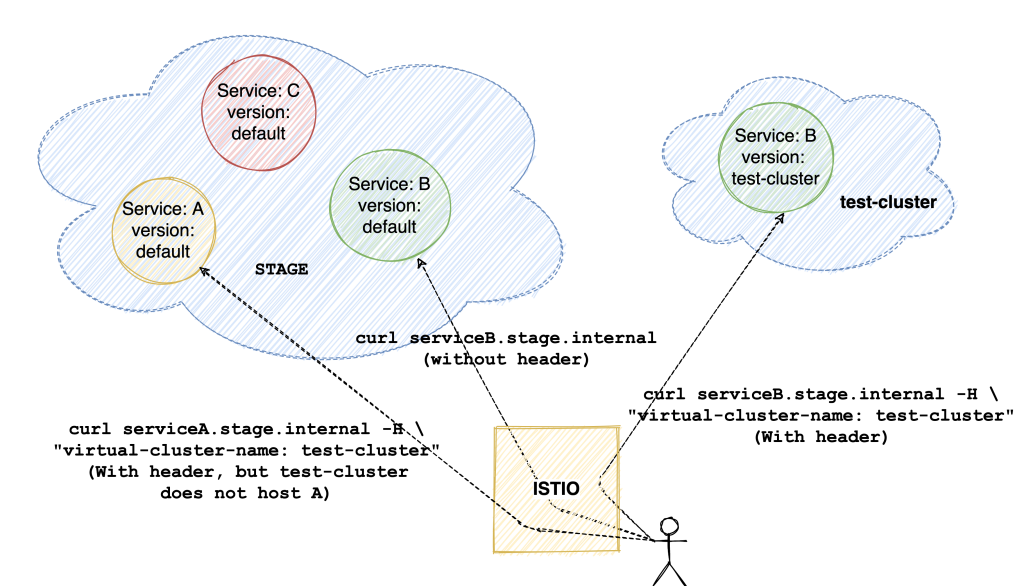

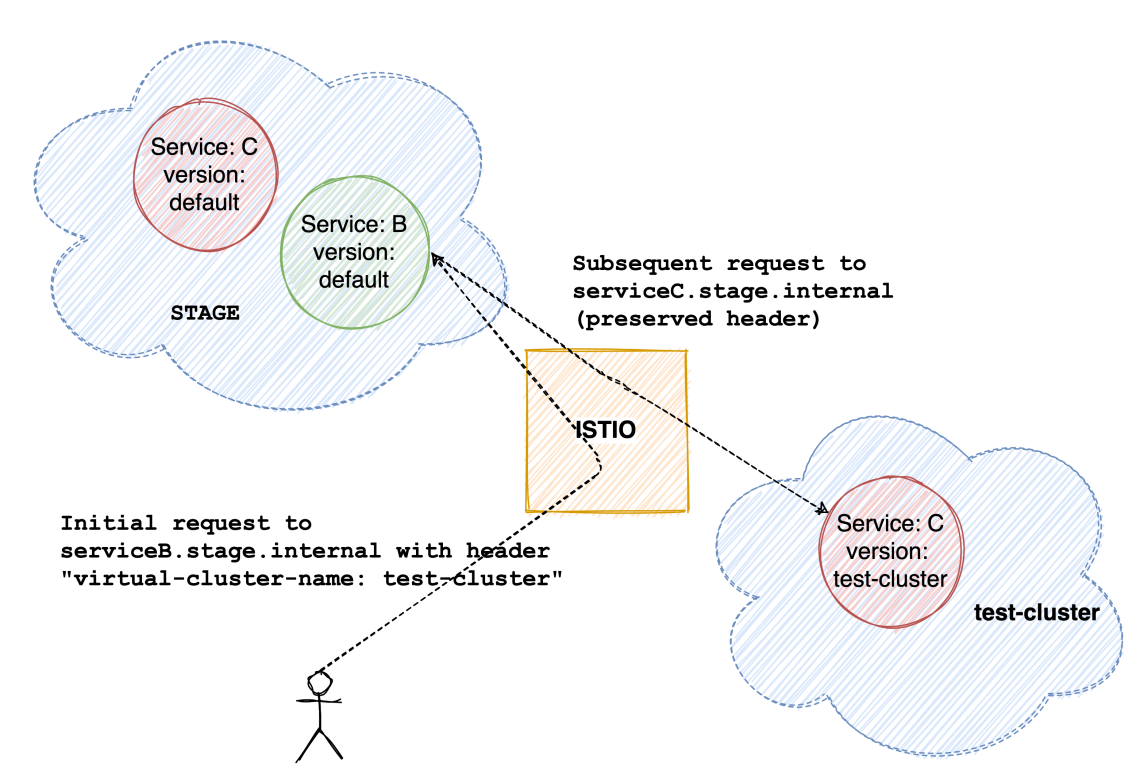

- We should be able to make requests to the VC (and the services it hosts) easily. We did this by simply injecting a header into the existing requests made to the staging version of the service

- A “service miss”, i.e. a request made to a VC for a service the VC does not host, should be handled correctly: such requests should be routed to the stage version of the intended service. This removes the need to deploy every single application to a VC

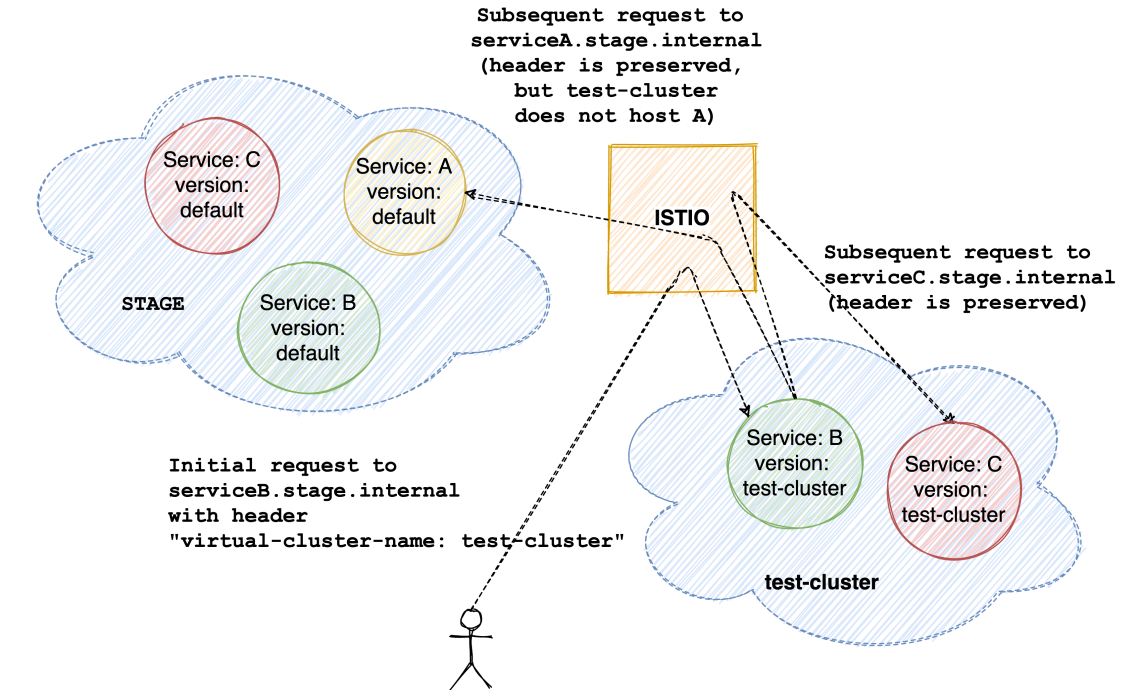

- Downstream requests from a service in a VC should be routed to services within the VC itself. That is, if serviceB in a VC calls serviceC, the request should go to the version of serviceC hosted in the same VC

- In the above case, if the VC doesn’t host the downstream service (i.e. the VC does not host serviceC), the request should go to the “default” stage version

- Downstream requests made by default stage versions of services as a result of a request containing the virtual-cluster-name header should also be routed to the correct VC.
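The requirements above boil down to a single per-hop decision. As a minimal sketch (the `VirtualCluster` model and both function names are hypothetical, not part of our tooling), the expected routing can be expressed as:

```python
from __future__ import annotations

from dataclasses import dataclass, field

DEFAULT_VERSION = "default"


@dataclass
class VirtualCluster:
    """Hypothetical model of a VC: a name plus the set of services it hosts."""
    name: str
    services: set[str] = field(default_factory=set)


def resolve_version(service: str, vc_header: str | None,
                    clusters: dict[str, VirtualCluster]) -> str:
    """Pick the version of `service` that should serve a request.

    - No virtual-cluster-name header: the default stage version.
    - Header names a VC that hosts the service: that VC's version.
    - Header names a VC that does not host it (a service miss): default.
    """
    if vc_header is None:
        return DEFAULT_VERSION
    vc = clusters.get(vc_header)
    if vc is not None and service in vc.services:
        return vc.name
    return DEFAULT_VERSION


def route_chain(chain: list[str], vc_header: str | None,
                clusters: dict[str, VirtualCluster]) -> list[tuple[str, str]]:
    """Resolve every hop of a call chain.

    The header travels with each downstream call (injected by a VC service or
    preserved by a default stage service), so each hop is resolved
    independently against the same VC.
    """
    return [(svc, resolve_version(svc, vc_header, clusters)) for svc in chain]


clusters = {"vcOne": VirtualCluster("vcOne", {"serviceB", "serviceC"})}
print(route_chain(["serviceA", "serviceB", "serviceC", "serviceD"], "vcOne", clusters))
# [('serviceA', 'default'), ('serviceB', 'vcOne'), ('serviceC', 'vcOne'), ('serviceD', 'default')]
```

Note that serviceA and serviceD are never deployed to the VC; they simply fall back to their default stage versions while the header keeps flowing.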

Our current staging infrastructure

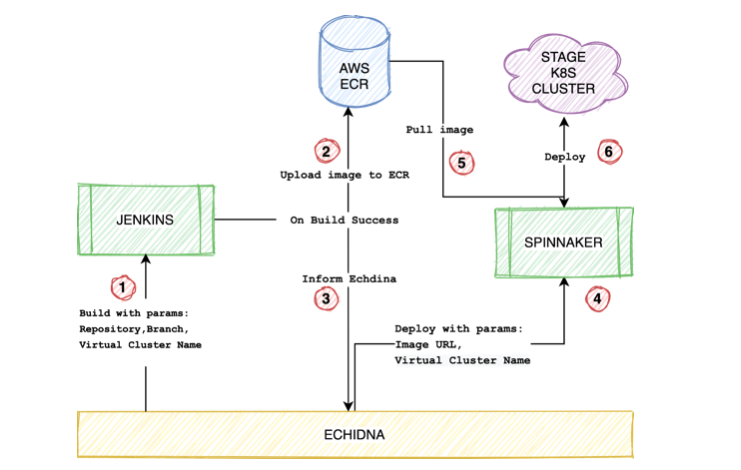

Since our concept of a virtual cluster builds upon the current staging infrastructure, it’s worth spending a few minutes understanding it. The TL;DR: we use Jenkins to build images, and each microservice has its own multibranch pipeline job. The final phase of the build uploads the built image to AWS ECR and triggers a Spinnaker pipeline that deploys this image to our stage Kubernetes cluster.

Within our cluster, each microservice has its own namespace, which hosts several resources pertinent to the microservice: for example, the microservice’s Deployment, some ConfigMaps, autoscaling rules (HPA), and so forth. We maintain each of these resources as Helm charts, which are rendered into k8s manifests as part of the Spinnaker pipeline (Bake). These manifests are then applied to the cluster (Deploy).

Building a Proof of Concept

We broke down our problem into the following subproblems:

- Build: Set up a workflow to build any branch of a given microservice, and upload it to AWS ECR

- Deploy: Deploy the built image onto the stage cluster

- Route: Conditionally route traffic to the new deployment based on certain headers in the request

Building branches

At the core of our solution is the ability to build and deploy any branch. Solving this part was fairly effortless: we simply created a new pipeline that takes the following parameters:

- repoName: The application repository to clone from

- branchName: The branch to build

- virtualClusterName: The virtual cluster to deploy to

The Jenkinsfile itself is picked up from the service’s git repository, so no service-specific changes are needed in the pipeline configuration. Essentially, one common pipeline builds all services, while each service keeps its own build logic in its Jenkinsfile. As usual, once the image is built, we push it to AWS ECR to be used later.
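For illustration, a parameterised Jenkins job can be queued remotely through its `buildWithParameters` endpoint. The sketch below only builds that URL; the job name `vc-build` and the Jenkins host are hypothetical placeholders, and the caller would still need to POST to the URL with credentials (for example, a user API token):

```python
from urllib.parse import quote, urlencode


def build_trigger_url(jenkins_url: str, job_name: str, repo_name: str,
                      branch_name: str, virtual_cluster_name: str) -> str:
    """Return the URL that queues one run of a parameterised Jenkins job.

    Jenkins exposes `<job URL>/buildWithParameters` for parameterised jobs;
    the parameters travel in the query string. Sending the (authenticated)
    POST request is left to the caller.
    """
    params = {
        "repoName": repo_name,
        "branchName": branch_name,
        "virtualClusterName": virtual_cluster_name,
    }
    job_path = quote(job_name, safe="")
    base = jenkins_url.rstrip("/")
    return f"{base}/job/{job_path}/buildWithParameters?{urlencode(params)}"


# "vc-build" and jenkins.example.com are hypothetical placeholders.
print(build_trigger_url("https://jenkins.example.com/", "vc-build",
                        "iris", "feature/login", "test-cluster-one"))
```

`urlencode` escapes the slash in branch names such as `feature/login`, so arbitrary branch names survive the query string intact.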

Deploying the image

The next goal is to actually deploy this built image to our staging cluster. By default, each service’s namespace hosts just one Deployment resource, corresponding to the stage branch. This Deployment resource looks something like this:

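```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: iris
    env: stage
    namespace: iris
    version: default
  name: iris
  namespace: iris
spec:
  selector:
    matchLabels:
      app: iris
      namespace: iris
      version: default
  template:
    metadata:
      # Pod labels must match spec.selector.matchLabels; Istio subsets
      # also select pods by these labels (notably `version`)
      labels:
        app: iris
        namespace: iris
        version: default
    spec:
      containers:
        - name: iris
          image: <docker-image-location>
          imagePullPolicy: IfNotPresent
          env: []  # environment variables omitted for brevity
```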

An extremely truncated version of the Deployment resource for our microservice “iris”

The convenient thing about this resource is its “version” label, which uniquely identifies it. Taking advantage of this, we add a new Deployment with modified “name” and “version” values. As some readers will have noticed, this is very similar to a canary deployment!

So adding a microservice to a virtual cluster essentially translates to adding a new Deployment for that microservice, with a different name and version value (in our case, we use the name of the virtual cluster). Consequently, at any given point, a service’s namespace could contain multiple versions of that service, depending on the number of active virtual clusters that host it.

```console
$ kubectl config set-context --current --namespace iris
Context "curefit-stage" modified.
$ kubectl get deployments
NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
iris                    1/1     1            1           149d
iris-test-cluster-one   1/1     1            1           6d
iris-test-cluster-two   1/1     1            1           3d
```

A service’s namespace can host multiple deployments, each belonging to a different virtual cluster
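Deriving such a per-VC Deployment from the default one is mechanical. A minimal sketch, assuming the manifest is handled as a Python dict and that the name and `version` label both use the same string (as with `iris-test-cluster-one` above); `vc_deployment` is a hypothetical helper:

```python
import copy

# Mirrors the truncated "iris" Deployment shown earlier, as a Python dict.
DEFAULT_IRIS = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {
        "name": "iris",
        "namespace": "iris",
        "labels": {"app": "iris", "env": "stage", "namespace": "iris", "version": "default"},
    },
    "spec": {
        "selector": {"matchLabels": {"app": "iris", "namespace": "iris", "version": "default"}},
        "template": {
            "metadata": {"labels": {"app": "iris", "namespace": "iris", "version": "default"}},
            "spec": {"containers": [{"name": "iris", "image": "<docker-image-location>"}]},
        },
    },
}


def vc_deployment(default: dict, version: str, image: str) -> dict:
    """Clone the default Deployment into a virtual-cluster version (hypothetical helper).

    The name and every `version` label change, so the default Deployment's
    selector (version: default) never picks up the new pods, and an Istio
    subset can target them by label.
    """
    dep = copy.deepcopy(default)  # never mutate the default stage manifest
    dep["metadata"]["name"] = version
    dep["metadata"]["labels"]["version"] = version
    dep["spec"]["selector"]["matchLabels"]["version"] = version
    dep["spec"]["template"]["metadata"]["labels"]["version"] = version
    dep["spec"]["template"]["spec"]["containers"][0]["image"] = image
    return dep
```

Changing the selector together with the pod labels matters: two Deployments with overlapping selectors would fight over the same pods.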

Routing traffic

The next obvious question is: how do we route traffic to a specific version? The answer lies within Istio! Istio offers resources such as Virtual Services and Destination Rules, which we add to each microservice’s namespace. Quoting from Istio’s traffic management documentation, “You can think of virtual services as how you route your traffic to a given destination, and then you use destination rules to configure what happens to traffic for that destination.” These resources work in tandem to finely control how traffic is routed within our k8s cluster.

To enable traffic to flow to a specific version, we first add a new Destination Rule corresponding to that version.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: iris-test-cluster-one
  namespace: iris
spec:
  host: iris-internal.iris.svc.cluster.local
  subsets:
    - name: iris-test-cluster-one
      labels:
        app: iris
        namespace: iris
        version: iris-test-cluster-one
```

A destination rule for the “iris-test-cluster-one” version of iris’s deployment
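Since this resource differs between versions only in its name and labels, it can be generated. A minimal sketch with a hypothetical `destination_rule` helper, emitting JSON (which `kubectl apply -f` also accepts):

```python
import json


def destination_rule(service: str, namespace: str, host: str, version: str) -> dict:
    """Build a DestinationRule exposing one subset for `version` (hypothetical helper).

    The subset's labels must match the pod template labels of that version's
    Deployment; otherwise traffic routed to the subset has no endpoints.
    """
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "DestinationRule",
        "metadata": {"name": version, "namespace": namespace},
        "spec": {
            "host": host,
            "subsets": [{
                "name": version,
                "labels": {"app": service, "namespace": namespace, "version": version},
            }],
        },
    }


print(json.dumps(destination_rule("iris", "iris",
                                  "iris-internal.iris.svc.cluster.local",
                                  "iris-test-cluster-one"), indent=2))
```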

Next up, we patch the Virtual Service to add a new header matching rule. We route requests to the appropriate destination based on the “virtual-cluster-name” header.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: iris-internal
  namespace: iris
spec:
  gateways:
    - iris-internal.istio-ingressgateway-internal.svc.cluster.local
  hosts:
    - iris-internal.iris.svc.cluster.local
    - iris.stage.internal
  http:
    - match:
        - uri:
            prefix: /
          headers:
            virtual-cluster-name:
              exact: iris-test-cluster-one
      route:
        - destination:
            host: iris-internal.iris.svc.cluster.local
            port:
              number: 80
            subset: iris-test-cluster-one
          weight: 100
    - match:
        - uri:
            prefix: /
      route:
        - destination:
            host: iris-internal.iris.svc.cluster.local
            port:
              number: 80
            subset: default
          weight: 100
```

A Virtual Service for the service iris. There is a header matching rule which routes traffic to the “iris-test-cluster-one” version. The rest of the traffic is routed to the “default” stage version.

So, let’s say requests to serviceA.stage.internal are routed to the “default” version of service A. Adding the header “virtual-cluster-name: test-cluster” to the same request will now route it to the “test-cluster” version of service A!
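On the client side, nothing changes except that one header. A minimal sketch using Python’s standard `urllib.request` and the `serviceA.stage.internal` host from the example above (`vc_request` is a hypothetical helper):

```python
from __future__ import annotations

import urllib.request

VC_HEADER = "virtual-cluster-name"


def vc_request(url: str, virtual_cluster: str | None = None) -> urllib.request.Request:
    """Build a request for a stage URL, optionally pinned to a virtual cluster."""
    headers = {VC_HEADER: virtual_cluster} if virtual_cluster else {}
    return urllib.request.Request(url, headers=headers)


req = vc_request("http://serviceA.stage.internal/", "test-cluster")
# urllib normalises the header name's case ("Virtual-cluster-name"). HTTP header
# names are case-insensitive, so Istio's header match is unaffected.
print(req.header_items())
# urllib.request.urlopen(req) would send it; the host only resolves inside our network.
```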

External requests vs Internal requests

So far, we have a solid system in place that can route requests to the correct version. However, the success of this solution hinges on the “virtual-cluster-name” header being present in the request; the source system making the request has to ensure that proper headers are in place.

If we try to categorise the requests based on their origin, they can be grouped into two kinds:

- External: Requests made from outside the cluster. For example, someone making requests directly to a service

- Internal: Requests made from one service to another, often in response to external requests

Modifying and adding headers to external requests is easy, since we’re in control of crafting them. However, we need a solution for internal requests originating from deployed services. In line with the expected behaviour outlined in previous sections, requests made by a service hosted in “test-cluster” should be routed to the “test-cluster” version of other services.

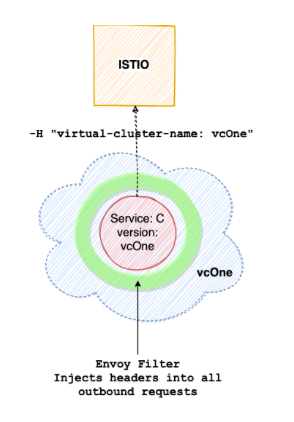

For instance, let’s assume services B and C are deployed in a virtual cluster “vcOne”. We expect all requests originating from service B to carry the appropriate header, so that they reach the correct version of the destination service (for instance, the “vcOne” version of service C).

To solve this, we add a new Istio resource called Envoy Filter for each service that is deployed to a virtual cluster. An Envoy Filter allows us to manipulate the incoming/outgoing requests for any application, without needing any code changes on the application.

Our particular Envoy Filter injects the “virtual-cluster-name” header in all outbound traffic. As a consequence, outbound requests from service B in “test-cluster” will also have the “virtual-cluster-name: test-cluster” header, and hence will be routed correctly by Istio.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: iris-test-cluster-one-header-injector
  namespace: iris
spec:
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        context: SIDECAR_OUTBOUND
        listener:
          filterChain:
            filter:
              name: envoy.http_connection_manager
              subFilter:
                name: envoy.router
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.http.lua
          typed_config:
            '@type': type.googleapis.com/envoy.extensions.filters.http.lua.v3.Lua
            inline_code: |
              function envoy_on_request(request_handle)
                request_handle:headers():replace("virtual-cluster-name", "iris-test-cluster-one")
              end
  workloadSelector:
    labels:
      version: iris-test-cluster-one
```

An Envoy Filter for the “iris-test-cluster-one” version of iris. It intercepts all outbound requests from the “iris-test-cluster-one” iris pods and injects the header "virtual-cluster-name: iris-test-cluster-one"
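Only the version string changes from one filter to the next, so the filter can be templated as well. A minimal sketch, assuming a hypothetical `header_injector` helper that mirrors the manifest above and validates the version first, since the value is embedded in a Lua string literal:

```python
import re

# A DNS-1123-style label (at most 63 chars, no quotes) is safe to embed in Lua.
_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]{0,61}[a-z0-9])?")


def header_injector(namespace: str, version: str) -> dict:
    """Build the header-injecting EnvoyFilter for one version (hypothetical helper)."""
    if not _LABEL.fullmatch(version):
        raise ValueError(f"not a valid version label: {version!r}")
    lua = (
        "function envoy_on_request(request_handle)\n"
        # replace() overwrites the header, or adds it if it is absent
        f'  request_handle:headers():replace("virtual-cluster-name", "{version}")\n'
        "end\n"
    )
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "EnvoyFilter",
        "metadata": {"name": f"{version}-header-injector", "namespace": namespace},
        "spec": {
            # Only pods of this version get the filter
            "workloadSelector": {"labels": {"version": version}},
            "configPatches": [{
                "applyTo": "HTTP_FILTER",
                "match": {
                    "context": "SIDECAR_OUTBOUND",
                    "listener": {"filterChain": {"filter": {
                        "name": "envoy.http_connection_manager",
                        "subFilter": {"name": "envoy.router"},
                    }}},
                },
                "patch": {
                    "operation": "INSERT_BEFORE",
                    "value": {
                        "name": "envoy.filters.http.lua",
                        "typed_config": {
                            "@type": "type.googleapis.com/envoy.extensions.filters.http.lua.v3.Lua",
                            "inline_code": lua,
                        },
                    },
                },
            }],
        },
    }
```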

Routing internal requests from default stage versions

Often we need to test flows in a service that are invoked by a different service. For instance, let’s say the user service calls the SMS service to send OTPs whenever a user tries to log in. If we want to test the entire login flow after making some changes to the SMS service, we need to test the user service and the SMS service in conjunction.

Adding an Envoy Filter does give us a complete solution: we could simply deploy both services to a virtual cluster and rely on the Envoy Filter to inject the correct header (which ensures correct routing). But deploying the user service to our virtual cluster seems wasteful, given that it has no changes. We could simply use its default stage version, if only the request it makes to the SMS service somehow contained the correct header!

To achieve this, we changed our stage services so that they “preserve” the “virtual-cluster-name” header. That is, if a request to a service contains this header, all requests made as a result of that request will carry the same header. Most of our services use either Spring Boot or Express; for Spring Boot services we used Spring Cloud Sleuth’s propagation keys, and for Express services, cls-hooked.
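The underlying idea is request-scoped storage: remember the inbound header for the lifetime of the request, and copy it onto every outbound call. As a Python analogue only (this uses the standard `contextvars` module, not Sleuth or cls-hooked, and both function names are hypothetical), the mechanism looks like:

```python
from __future__ import annotations

import contextvars
from typing import Callable, TypeVar

T = TypeVar("T")
VC_HEADER = "virtual-cluster-name"

# Request-scoped storage, playing the role cls-hooked namespaces play in Node.
_current_vc: contextvars.ContextVar[str | None] = contextvars.ContextVar(
    "current_vc", default=None)


def handle_incoming(headers: dict[str, str], handler: Callable[[], T]) -> T:
    """Middleware-style wrapper: remember the inbound header while `handler` runs."""
    vc = next((v for k, v in headers.items() if k.lower() == VC_HEADER), None)
    token = _current_vc.set(vc)
    try:
        return handler()
    finally:
        _current_vc.reset(token)  # never leak one request's VC into the next


def outgoing_headers(extra: dict[str, str] | None = None) -> dict[str, str]:
    """Headers for a downstream call, carrying the preserved VC name if present."""
    headers = dict(extra or {})
    vc = _current_vc.get()
    if vc is not None:
        headers[VC_HEADER] = vc
    return headers


def login_flow() -> dict[str, str]:
    # e.g. the default user service building its call to the SMS service
    return outgoing_headers({"accept": "application/json"})


print(handle_incoming({"Virtual-Cluster-Name": "vcOne"}, login_flow))
# {'accept': 'application/json', 'virtual-cluster-name': 'vcOne'}
```

asyncio tasks inherit the current context automatically; work handed to plain threads would need `contextvars.copy_context().run(...)` to keep the header.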

One should note, though, that this does not make the Envoy Filter redundant. Header preservation works only if there is an initial external request that contains the header. For example, if we’re testing changes in a service that runs an internal cron (which makes a bunch of requests), there is no external “trigger” request. In such a case, the Envoy Filter is necessary to inject the header.

Dealing with service misses

One unanswered question is: what happens on a service miss? That is, what if we make a request to service Z with the “virtual-cluster-name” header set to “test-cluster”, but “test-cluster” does not host service Z? In that case, service Z’s Virtual Service has no header matching rule for “test-cluster” (since we add one only when a service is deployed to a VC). Consequently, the request falls through to the catch-all rule and is routed to the “default” staging version of service Z.
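The fall-through follows from Istio evaluating HTTP routes in order, with the first match winning. A simplified sketch (the matcher is hypothetical and models only `uri.prefix` and exact header matches) replaying the iris routes above:

```python
from __future__ import annotations

# The two HTTP routes of the iris Virtual Service, trimmed to the fields used here.
IRIS_ROUTES = [
    {"match": [{"uri": {"prefix": "/"},
                "headers": {"virtual-cluster-name": {"exact": "iris-test-cluster-one"}}}],
     "route": [{"destination": {"subset": "iris-test-cluster-one"}}]},
    {"match": [{"uri": {"prefix": "/"}}],
     "route": [{"destination": {"subset": "default"}}]},
]


def match_route(http_routes: list[dict], path: str, headers: dict[str, str]) -> str | None:
    """Return the subset a simplified Istio HTTP route list would choose.

    Routes are tried in order and the first match wins; all conditions inside
    one match entry must hold; a route without `match` matches everything.
    """
    lowered = {k.lower(): v for k, v in headers.items()}
    for route in http_routes:
        for cond in route.get("match", [{}]):
            if not path.startswith(cond.get("uri", {}).get("prefix", "")):
                continue
            wanted = cond.get("headers", {})
            if all(name.lower() in lowered and lowered[name.lower()] == spec.get("exact")
                   for name, spec in wanted.items()):
                return route["route"][0]["destination"]["subset"]
    return None


# "test-cluster" does not host iris, so this service miss falls through to default.
print(match_route(IRIS_ROUTES, "/users", {"virtual-cluster-name": "test-cluster"}))
```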

Productionising the POC

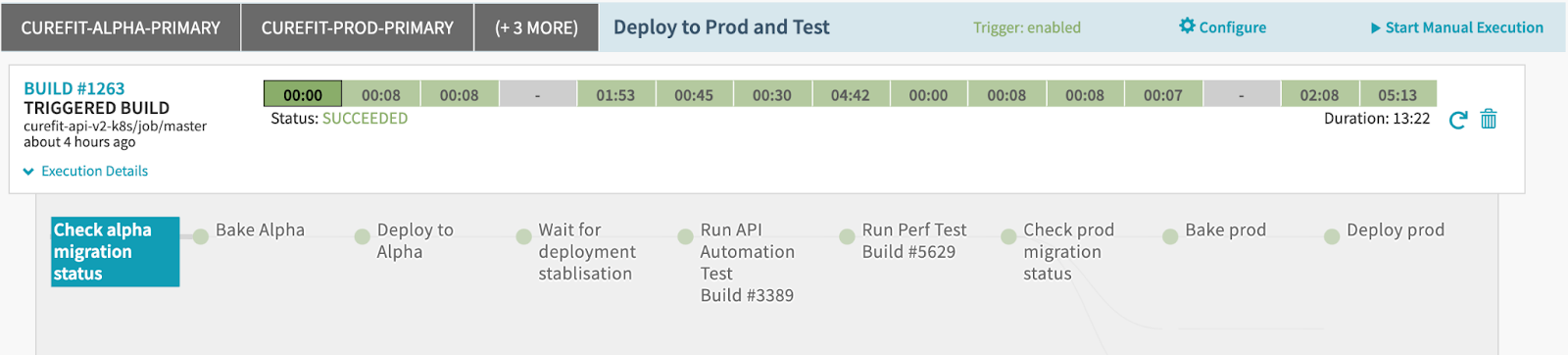

As we can see above, our solution requires quite a few steps; certainly not something we can expect someone to perform every time they wish to test their changes. We wanted the solution to be as simple as possible for the tech team to use. Ultimately, we decided to build a layer (which we fondly called Echidna, the mother of all monsters) that coordinates among the different components and hides all of it behind an easy-to-use dashboard. Here’s a fifty-thousand-foot view:

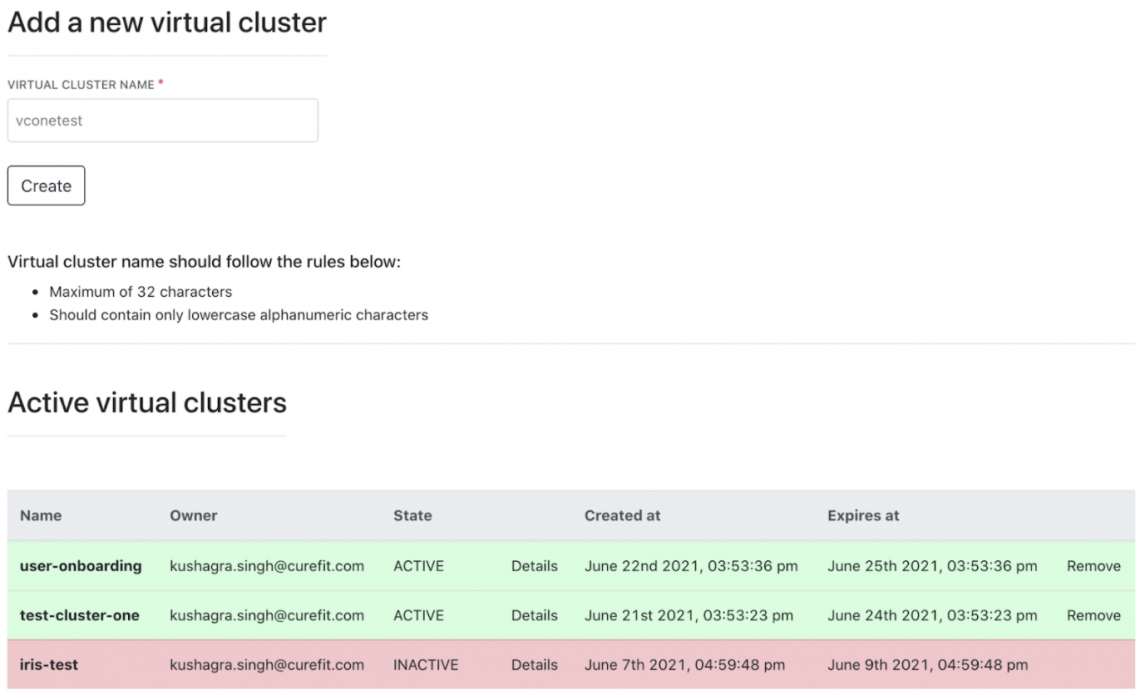

Echidna offers a user interface that allows creation of new virtual clusters, viewing existing clusters, removing them, and so forth; essentially, all CRUD operations. Having this dashboard also made it very easy to track and audit virtual clusters and share them with other members of the tech team.

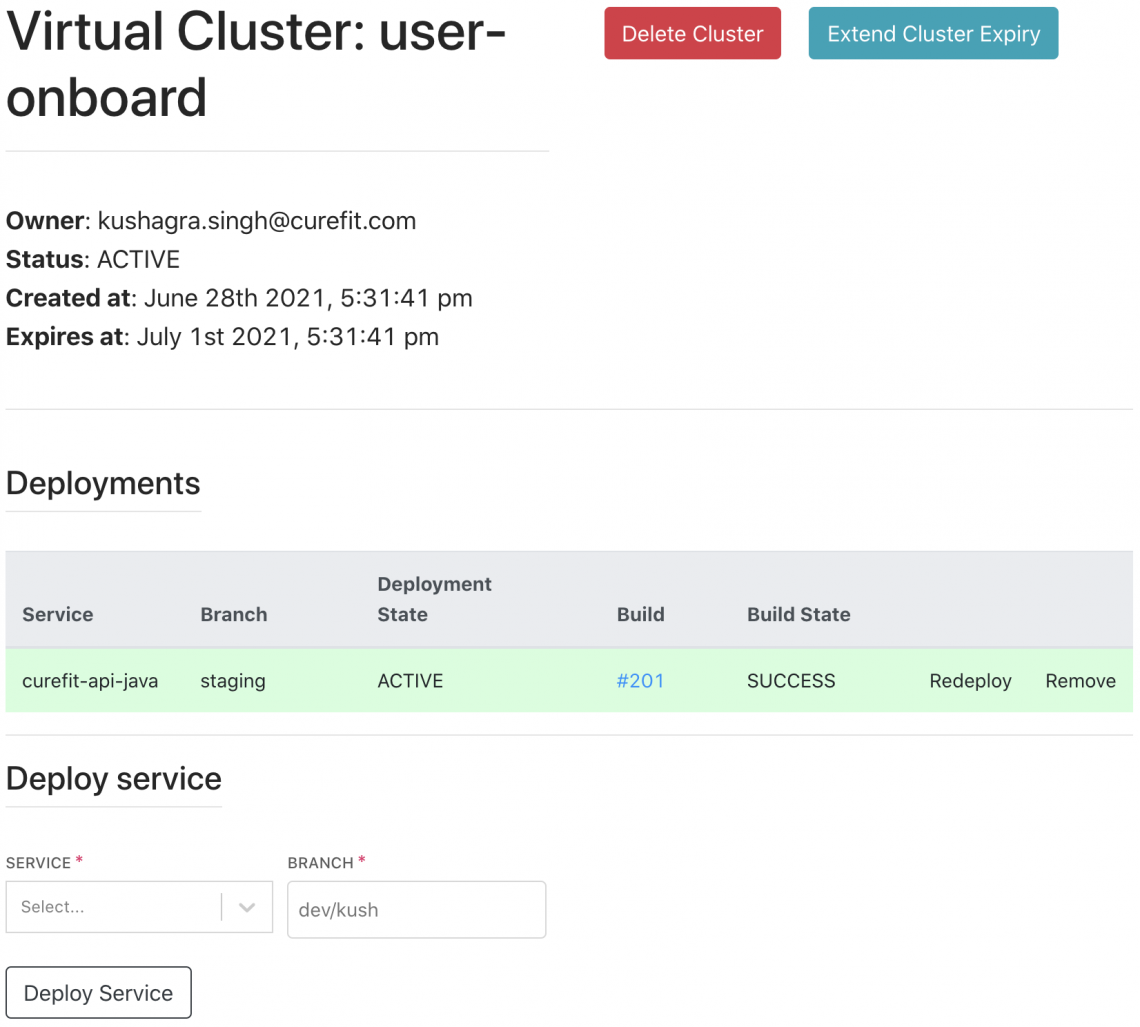

This dashboard also made it possible to allow for “single click deployments”; each virtual cluster has its own page from where this can be done. Internally, Echidna coordinates with Jenkins and Spinnaker to build and deploy images to the stage cluster.

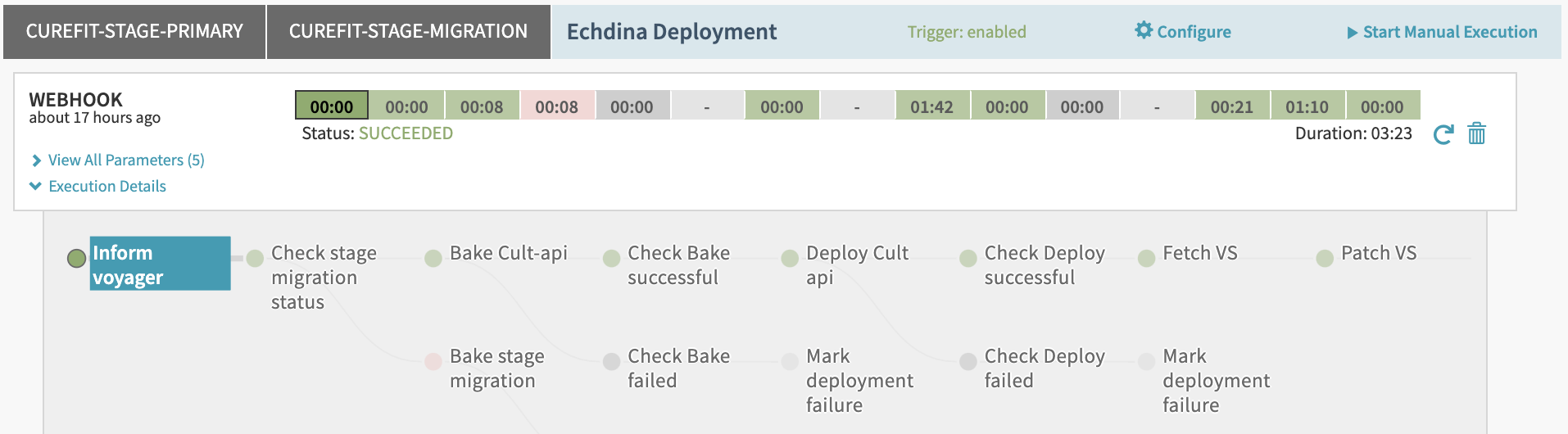

Behind the scenes, whenever a new service is added to a virtual cluster, Echidna triggers a Jenkins build. As soon as the build reports success via a callback, Echidna triggers a deploy pipeline. This pipeline is almost identical to our stage deployment pipeline, but with one additional step, which patches the Virtual Service resource (to add an appropriate header matching rule).

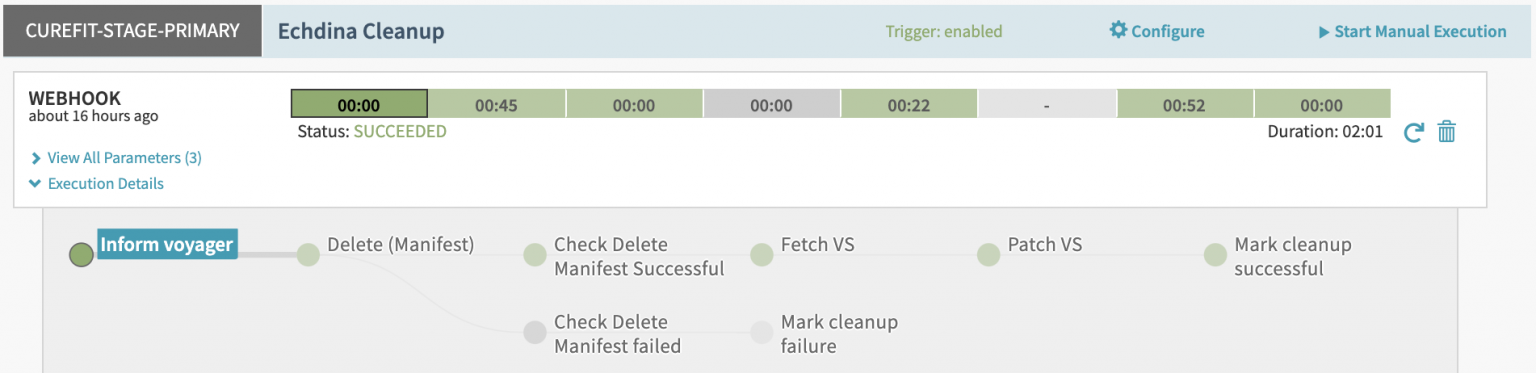

Similarly, whenever a service is removed from a virtual cluster, we execute a cleanup pipeline. This deletes all the pertinent resources (Deployment, Envoy Filter, etc.), and patches the Virtual Service again to remove the header matching rule for this version of the service.

Advantages of a stateful service

Apart from aiding usability and visibility, having a stateful service to manage virtual clusters has other benefits as well. Given that the success of our solution hinges on correctly modifying the Virtual Service, the ability to keep track of all virtual clusters that host a given service is a boon. Our Spinnaker pipelines fetch the patch to be applied directly from Echidna, which keeps track of the active versions of each service.

Additionally, one concern we had in the beginning was resources being wasted by virtual clusters that are never removed. Having a stateful service helped mitigate this as well, since we could model certain properties of each virtual cluster (such as a time to live, or TTL) and act on them (for example, cleaning up a cluster when its TTL reaches 0).
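A minimal sketch of such state, assuming an in-memory registry (Echidna’s actual storage and schema are not described here, and every name below is hypothetical). The `cleanup` callback stands in for triggering the cleanup pipeline per hosted service:

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Callable


@dataclass
class VirtualClusterRecord:
    """Hypothetical per-cluster record a stateful manager might keep."""
    name: str
    created_at: datetime
    ttl: timedelta
    services: set[str] = field(default_factory=set)

    def expired(self, now: datetime) -> bool:
        return now >= self.created_at + self.ttl


class Registry:
    def __init__(self) -> None:
        self._clusters: dict[str, VirtualClusterRecord] = {}

    def create(self, name: str, ttl: timedelta, now: datetime) -> None:
        if name in self._clusters:
            raise ValueError(f"virtual cluster {name!r} already exists")
        self._clusters[name] = VirtualClusterRecord(name, now, ttl)

    def add_service(self, name: str, service: str) -> None:
        self._clusters[name].services.add(service)

    def versions_hosting(self, service: str) -> list[str]:
        """Active VCs hosting `service`: the input for its Virtual Service rules."""
        return sorted(c.name for c in self._clusters.values() if service in c.services)

    def sweep(self, now: datetime, cleanup: Callable[[str, str], None]) -> list[str]:
        """Tear down expired clusters, calling cleanup(vc, service) for each hosted service."""
        expired = [c for c in self._clusters.values() if c.expired(now)]
        for cluster in expired:
            for service in sorted(cluster.services):
                cleanup(cluster.name, service)
            del self._clusters[cluster.name]
        return sorted(c.name for c in expired)
```

Running `sweep` periodically (for example, from a scheduler) is enough to reclaim forgotten clusters.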

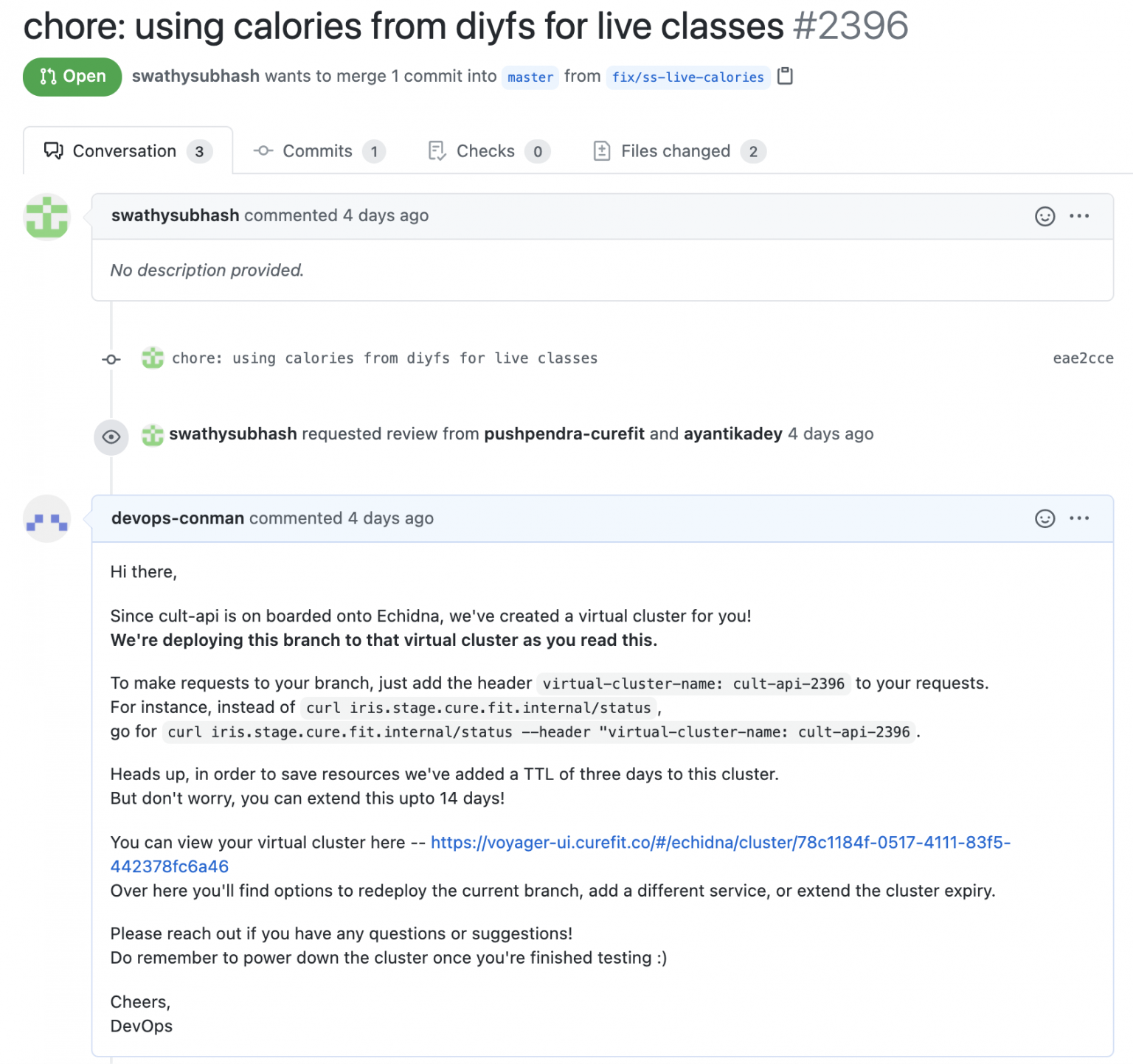

Auto deploying using GitHub webhooks

To further ease testing, we integrated GitHub webhooks with Echidna. This eliminates even the “single click” 😃. Every time a new pull request is raised against the master branch, we automatically create a virtual cluster and deploy the development branch to it.
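A minimal sketch of the webhook side, assuming GitHub’s `pull_request` event payload (`action`, `pull_request.base.ref`, `pull_request.head.ref`, `repository.name`) and its `X-Hub-Signature-256` header. The VC naming convention and the function names are hypothetical:

```python
from __future__ import annotations

import hashlib
import hmac
import re


def verify_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Check GitHub's X-Hub-Signature-256 header (HMAC-SHA256 of the raw body)."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected.encode(), signature_header.encode())


def vc_name_for(repo: str, branch: str) -> str:
    """Derive a Kubernetes-safe VC name from repo and branch (hypothetical convention)."""
    name = re.sub(r"[^a-z0-9-]+", "-", f"{repo}-{branch}".lower()).strip("-")
    return name[:63].rstrip("-")


def plan_from_event(event: str, payload: dict) -> dict | None:
    """Map a webhook delivery to VC build parameters, or None to ignore it."""
    if event != "pull_request" or payload.get("action") != "opened":
        return None
    pr = payload["pull_request"]
    if pr["base"]["ref"] != "master":
        return None
    repo = payload["repository"]["name"]
    branch = pr["head"]["ref"]
    return {"repoName": repo, "branchName": branch,
            "virtualClusterName": vc_name_for(repo, branch)}
```

The returned dict matches the parameters of the common build pipeline described earlier, so the same build-and-deploy path serves both the dashboard and the webhook.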

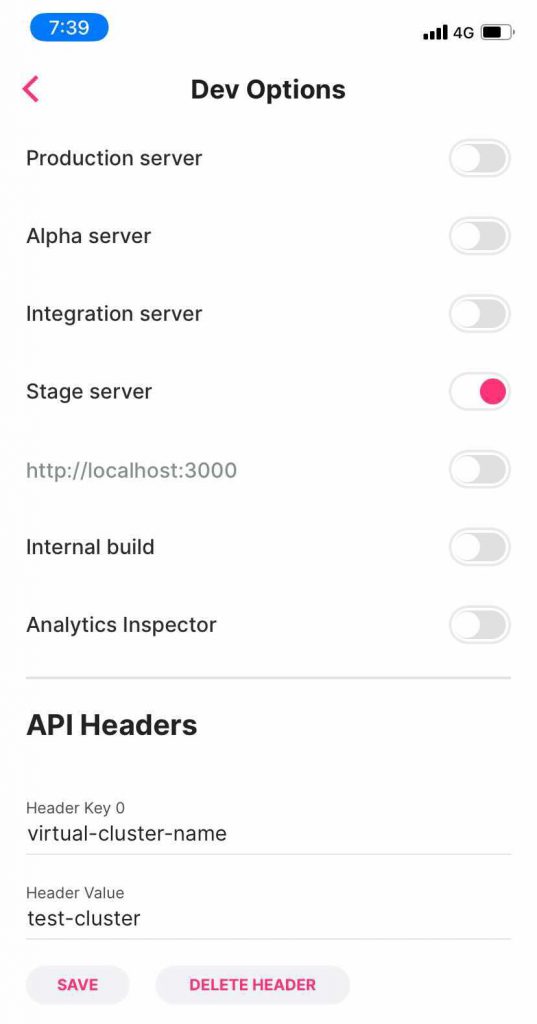

Testing new features on our mobile app and web

Another important use case that we saw was testing new features on our mobile application. Since our entire routing is based on header matching rules, it became extremely easy to integrate this as well!

Our mobile application allows injecting headers into outbound requests, which makes it possible to point the app to a certain virtual cluster

We added functionality to our app that injects headers into all outbound requests. This lets us “point” the app at different code bases quickly and easily. Similarly, web-based applications simply use a browser extension to inject the appropriate header and test a different version of their code.

Conclusion

Our current solution achieves most of what we set out to achieve: it gives developers the freedom to deploy their changes without worrying about affecting others and, consequently, makes the staging environment more reliable as well. However, a few open challenges are yet to be solved.

Probably the most daunting of them is asynchronous communication. Our current implementation routes HTTP traffic to the correct destinations, but does not isolate any pub-sub models a service might be using. As a consequence, every “version” of a service ends up publishing to and consuming from the same queue.

One potential fix is to host both services (i.e. the producer and the consumer) in a virtual cluster and have them publish to and consume from a separate queue created specifically for that virtual cluster. However, this carries considerable overhead and would require code changes in individual microservices as well; not ideal. A less intrusive solution would be to inject the virtual cluster name into the published message itself and have consumers filter messages based on that attribute.
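The attribute-filtering idea can be sketched as follows. This is a hypothetical design, not something we have built, and it assumes every version of a consumer receives every message (for example, each version has its own subscription to the topic), since filtering cannot work on a queue where each message goes to a single consumer:

```python
from __future__ import annotations

VC_ATTR = "virtual-cluster-name"
DEFAULT_VERSION = "default"


def publish_attributes(current_vc: str | None) -> dict[str, str]:
    """Message attributes a producer would attach (current_vc from the request context)."""
    return {VC_ATTR: current_vc} if current_vc else {}


def should_consume(attrs: dict[str, str], my_version: str, vcs_hosting_me: set[str]) -> bool:
    """Decide whether this consumer version should process a message.

    my_version: "default" for the stage deployment, otherwise the VC name.
    vcs_hosting_me: the VCs that run their own version of this consumer.
    """
    tag = attrs.get(VC_ATTR)
    if my_version == DEFAULT_VERSION:
        # Untagged messages, plus messages tagged for a VC that does not run
        # this consumer (the pub-sub equivalent of a service miss).
        return tag is None or tag not in vcs_hosting_me
    return tag == my_version
```

Exactly one version handles each message: the VC’s own consumer if it exists, otherwise the default one, mirroring how HTTP service misses are handled.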

Another issue is data isolation. Presently, all deployed versions of a service share the same datastores. Ideally, each virtual cluster should “clone” data from a pristine source and modify only its own working set. This would prevent side effects caused by another version working on the same data; for instance, a service running a cron that acts on keys set in Redis. Sharing datastores also makes testing database migrations impossible.

Despite these caveats, our first version works well for most use cases. As a long-term goal, we aim to discourage manual stage deployments altogether and instead sync staging services from production at regular intervals. However, there’s a long road ahead. If working on cutting-edge infra and solving tricky problems excites you, come join us!

Credits -- Kush, Adithya, and Vikram